NOMAD ARTIFICIAL INTELLIGENCE SUPPORT

Overview

Nomad supports face detection and person detection directly within the Nomad Platform and its administrative interface. Behind the scenes, Nomad relies on several artificial intelligence (AI) services, including Amazon Rekognition and Amazon Transcribe, to analyze the visual and audio elements of your media.

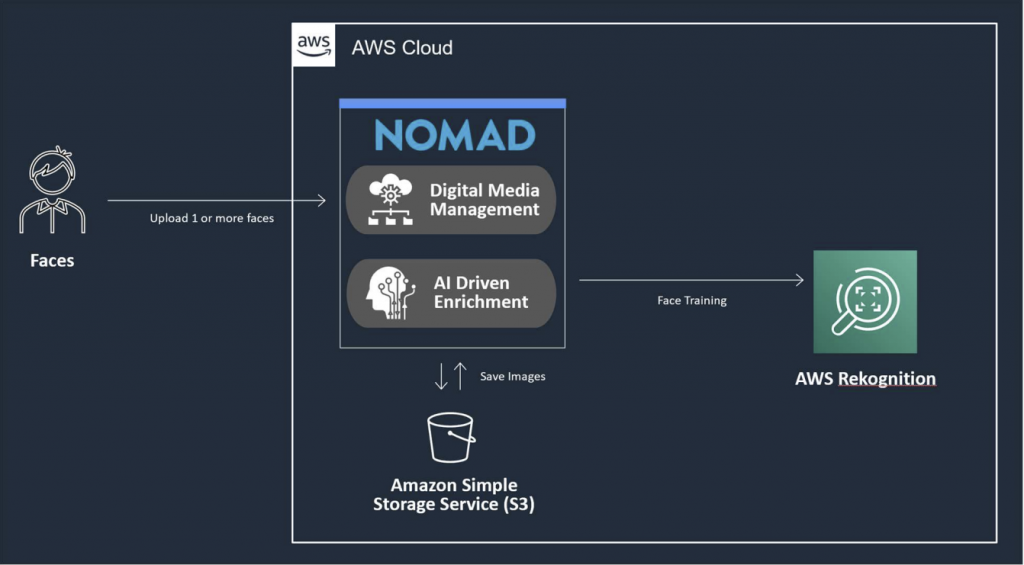

Face Training

Face detection starts by “training” one or more faces: as media is uploaded to Nomad, the faces in it are cataloged by Amazon Rekognition. The Nomad interface is then used to complete the training by giving a name to each group of similar faces.
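The naming step can be pictured with a small data model. This is only an illustrative sketch: `FaceGroup`, `name_group`, and `resolve_name` are hypothetical names invented for this example, not part of the Nomad or Amazon Rekognition APIs, and the face IDs are made up.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FaceGroup:
    """A group of similar faces found in uploaded media (hypothetical structure)."""
    group_id: str
    face_ids: list = field(default_factory=list)
    name: Optional[str] = None  # stays None until someone names the group


def name_group(groups: dict, group_id: str, name: str) -> None:
    """'Train' a group by attaching a person's name to it."""
    groups[group_id].name = name.strip()


def resolve_name(groups: dict, face_id: str) -> Optional[str]:
    """Return the trained name for a face, or None if unknown or still unnamed."""
    for group in groups.values():
        if face_id in group.face_ids:
            return group.name
    return None


# Two groups of cataloged faces; only the first one has been named.
groups = {
    "g1": FaceGroup("g1", ["face-001", "face-002"]),
    "g2": FaceGroup("g2", ["face-003"]),
}
name_group(groups, "g1", "Jane Doe")

print(resolve_name(groups, "face-002"))  # Jane Doe
print(resolve_name(groups, "face-003"))  # None
```

Once a group has a name, any later match against a face in that group can be turned into a name tag; faces in unnamed groups simply produce no name.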

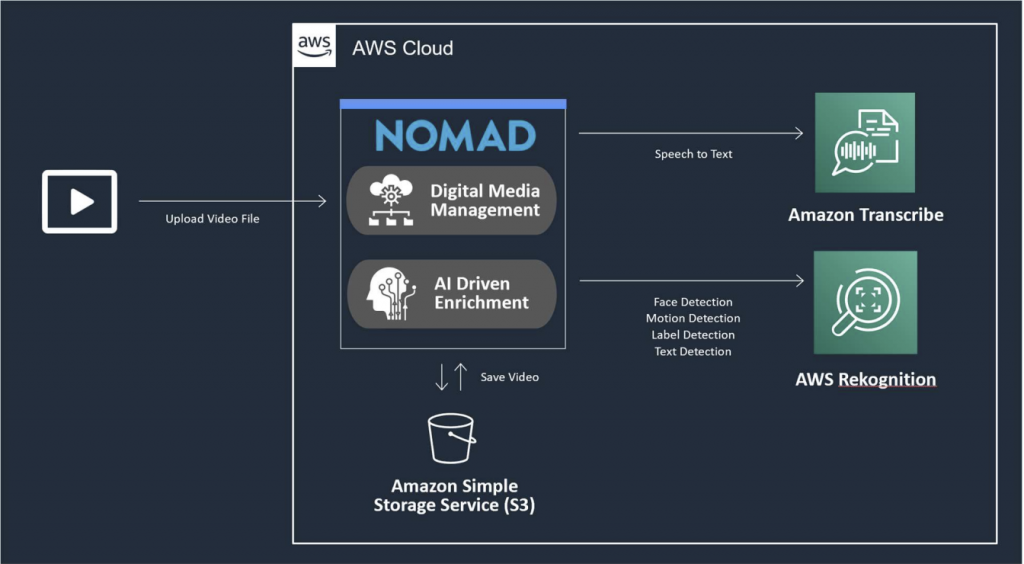

Image and Video Analysis

Face and person detection can then be applied to images, video assets (files), and live video streams.

For images and video assets, every matching face is used to tag the asset with that person's name as the media is uploaded. In addition, the results of person detection, activity tracking, and other AI services such as text detection and label detection are applied to the media as tags.

Note that for videos, all tagging is done at the timecode level, so each finding can be located at the exact point in the media where it occurs.
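To make timecode-level tagging concrete, here is a minimal sketch of how such tags could be represented and queried. The `TimedTag` structure, its field names, and the sample values are assumptions made for illustration; they do not describe Nomad's actual storage format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimedTag:
    label: str      # e.g. a person's name or a detected label
    kind: str       # "face", "person", "text", "label" (illustrative categories)
    start_s: float  # where the tag starts, in seconds from the start of the video
    end_s: float    # where the tag ends


def to_timecode(seconds: float) -> str:
    """Format a number of seconds as HH:MM:SS.mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def appearances(tags, label):
    """Return (start, end) timecodes for every tag with this label, in time order."""
    hits = sorted((t for t in tags if t.label == label), key=lambda t: t.start_s)
    return [(to_timecode(t.start_s), to_timecode(t.end_s)) for t in hits]


tags = [
    TimedTag("Jane Doe", "face", 75.25, 80.0),
    TimedTag("EXIT", "text", 3.0, 4.2),
    TimedTag("Jane Doe", "face", 12.5, 18.0),
]

print(appearances(tags, "Jane Doe"))
# [('00:00:12.500', '00:00:18.000'), ('00:01:15.250', '00:01:20.000')]
```

Because every tag carries its own time range, a search for a person returns the moments where that person appears rather than only the asset as a whole.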

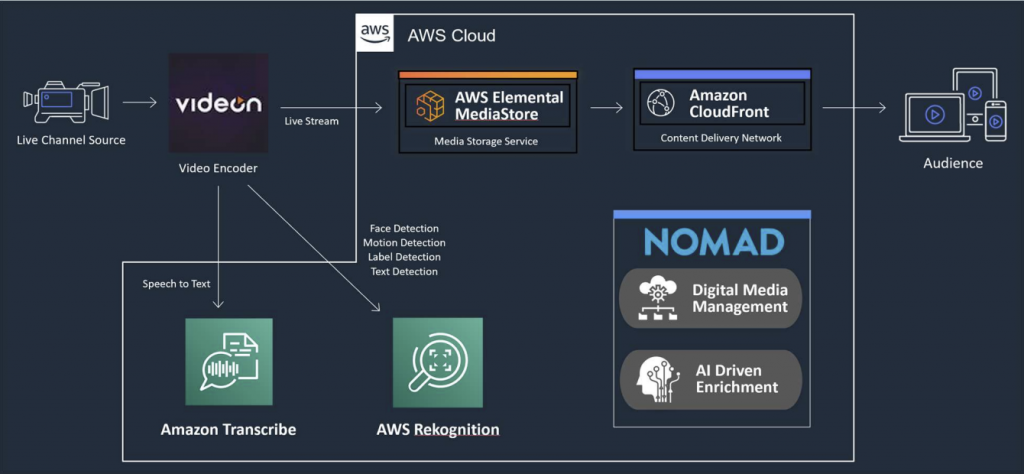

Cloud Live Video Analysis

Live video is analyzed in “near real time” by extracting frames of video directly on the encoder device and sending them to the cloud for processing. The live analysis uses the same AI technologies that process media assets, such as Amazon Transcribe and Amazon Rekognition.

Nomad works directly with Videon EdgeCaster encoders to extract the video frames on the encoder and then reports the AI findings through Nomad. Alerts, business rules, or even third-party integrations can be triggered by AI findings such as the presence of a specific person, the presence of any person, or simply motion. Audio cues can be triggered when specific words are heard, or by the presence (or absence) of audio.
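The trigger types described above can be sketched as a small rule table evaluated against each incoming finding. Everything here is hypothetical: `Finding`, `Rule`, the rule names, and the sample person and keyword are invented for illustration and are not Nomad's rule syntax.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Finding:
    kind: str                    # "face", "person", "motion", "word", or "audio"
    value: Optional[str] = None  # person's name or spoken word, when applicable
    present: bool = True         # False models "no audio detected"


@dataclass(frozen=True)
class Rule:
    name: str
    matches: Callable[[Finding], bool]


RULES = [
    Rule("specific person seen", lambda f: f.kind == "face" and f.value == "Jane Doe"),
    Rule("any person seen", lambda f: f.kind in ("face", "person")),
    Rule("motion detected", lambda f: f.kind == "motion"),
    Rule("keyword heard", lambda f: f.kind == "word" and (f.value or "").lower() == "help"),
    Rule("audio lost", lambda f: f.kind == "audio" and not f.present),
]


def triggered(finding, rules=RULES):
    """Return the names of all rules that this finding triggers."""
    return [rule.name for rule in rules if rule.matches(finding)]


print(triggered(Finding("face", "Jane Doe")))      # ['specific person seen', 'any person seen']
print(triggered(Finding("word", "Help")))          # ['keyword heard']
print(triggered(Finding("audio", present=False)))  # ['audio lost']
```

A single finding can trigger more than one rule, which is why `triggered` returns a list; each returned name could then be mapped to an alert or a third-party integration.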

Typical analysis latency ranges from 3 to 10 seconds, depending on the type and depth of analysis.

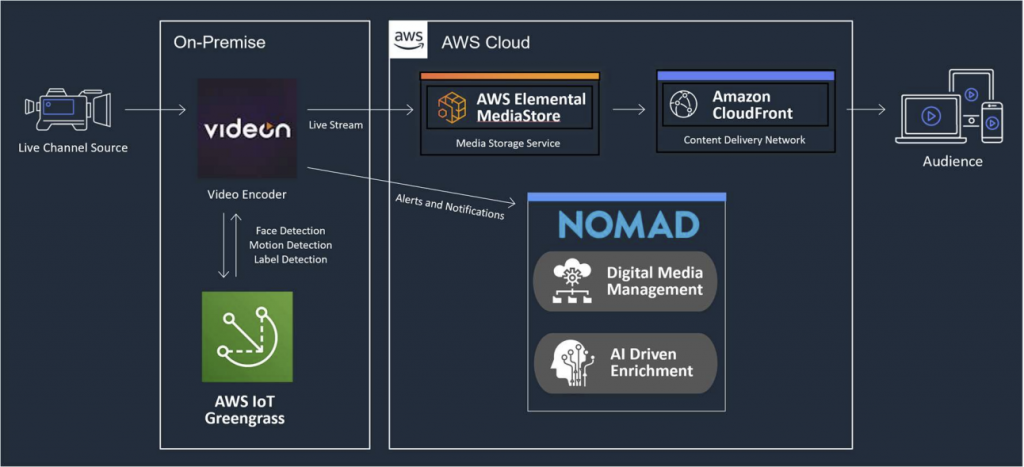

Edge Live Video Analysis

For situations where video content needs to be analyzed with lower latency or without a cloud connection, AWS IoT Greengrass can be used.

This allows the AI analysis to be performed directly on the EdgeCaster device itself with sub-second latency. The same alerts, business rules, or third-party integrations are still available and are triggered identically.

The primary difference is where the AI analysis occurs: on the device instead of in the cloud.
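The choice between cloud and edge analysis can be reduced to a simple decision, sketched below. The latency figures come from the sections above (3 to 10 seconds in the cloud, sub-second on the device); the function name and the conservative rule of comparing against the upper end of the cloud range are assumptions made for this example.

```python
CLOUD_LATENCY_RANGE_S = (3.0, 10.0)  # typical cloud analysis latency, from the text
EDGE_MAX_LATENCY_S = 1.0             # "sub-second" on-device analysis


def choose_analysis_location(max_latency_s: float, has_cloud_connection: bool) -> str:
    """Pick "edge" or "cloud" for a stream (hypothetical helper).

    Without a cloud connection, edge analysis is the only option. Otherwise,
    use the cloud only when the latency budget covers the slow end of the
    typical cloud range (a deliberately conservative assumption).
    """
    if not has_cloud_connection:
        return "edge"
    if max_latency_s < CLOUD_LATENCY_RANGE_S[1]:
        return "edge"
    return "cloud"


print(choose_analysis_location(0.5, has_cloud_connection=True))    # edge
print(choose_analysis_location(30.0, has_cloud_connection=True))   # cloud
print(choose_analysis_location(30.0, has_cloud_connection=False))  # edge
```

Since the alerts and rules are triggered identically in both modes, a decision like this affects only where the analysis runs, not how its findings are consumed.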